Juggling Coordinator Settings in Lambda Complex

This past month I finally found the time to finish up, roll out, and smooth off some corners on Lambda Complex. It is a fairly lightweight framework that eases the creation and deployment of applications that run entirely within AWS Lambda, SQS, and other higher-level AWS services. It has a small devops footprint since there is no need to set up servers, and for some of us at least this is the primary incentive to use Lambda. With the right approach it can abstract away a lot of tedious but necessary work.

Lambda Complex is a good fit for message-queue-based applications where the designers care about concurrency limits on various operations, and appreciate the ability to monitor and alert on application state via queue attributes such as the count of messages pending consumption. The framework was originally started with non-real-time static web content generation in mind, but it has many other possible uses.

A Brief Overview

The Lambda Complex documentation is the best place to start for an overview of how it works:

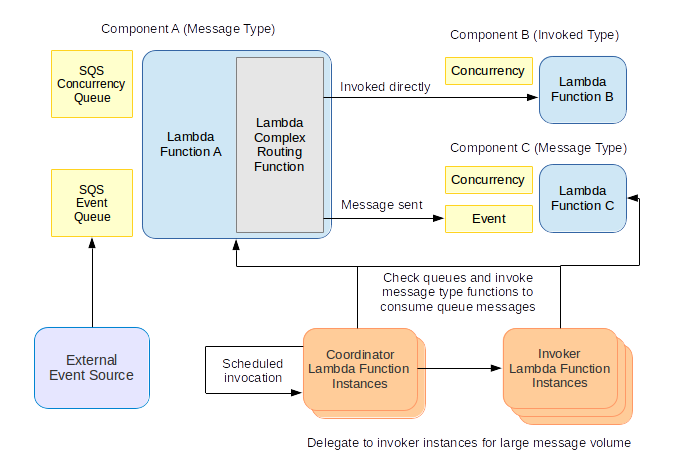

In short, an application is constructed from components, each of which consists of a Lambda function, a concurrency-tracking queue, and possibly an event queue. The framework for the application includes routing function definitions that determine destinations for the output of each Lambda function invocation. In this way invocations of different functions can be chained together, with data passing either directly or via message queues.

The Coordinator

The coordinator Lambda function sits at the center of Lambda Complex. One or more instances of the coordinator are always running to (a) check the state of the application via its queues and (b) invoke application Lambda functions when event queues contain messages to be consumed. Accordingly, the coordinator has its own section in the Lambda Complex configuration:

    coordinator: {
      coordinatorConcurrency: 2,
      maxApiConcurrency: 10,
      maxInvocationCount: 20,
      minInterval: 10
    },

The subject for this post is how to get the most out of the coordinator configuration for your application; bear in mind that this was written for Lambda Complex version 0.6.0.

coordinatorConcurrency

The coordinatorConcurrency property sets the number of coordinators that run at one time, each handling a fraction of the overall need to invoke other Lambda functions. This should be set to at least 2 to help ensure that an unforeseen error doesn't completely stop the application from running: until such time as scheduled events for Lambda make it to the APIs and CloudFormation, Lambda Complex is reliant on coordinators invoking themselves in series, leaving them vulnerable to the unknown, such as a behind-the-scenes disruption of service. If a coordinator finds that too few coordinators are running, it will launch more, so running more than one at once is a form of insurance.

Unless you are guaranteed a continual flood of events arriving in SQS queues, there really is no reason to have any more than the bare minimum of coordinators: they run continually, and count towards cost and limits. If faced with a sudden spike in queue messages and invocations, a coordinator will delegate to as many invoker instances as are required to issue the API requests while remaining within the other coordinator limits.

maxApiConcurrency

The coordinator issues AWS API requests at the given concurrency. This includes checking on queue attributes and invoking other Lambda functions. It is fairly safe to run 10-20 concurrent requests via the AWS SDK for Node.js from a single Node.js process, but going much beyond that is asking for trouble. Within the AWS infrastructure requests resolve much more rapidly than when running on a local machine, but there is still a definite limit as to what you can get away with in one process without incurring an ever greater number of network errors.
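
To make the idea of a fixed request concurrency concrete, here is a minimal sketch of a worker-pool pattern in plain Node.js. This is not the Lambda Complex implementation: `runWithConcurrency` is a hypothetical helper, and the stand-in tasks use timers where a real coordinator would be making AWS SDK calls.

```javascript
// Hypothetical helper, not part of Lambda Complex: run promise-returning
// tasks with at most `limit` of them in flight at any one time.
async function runWithConcurrency(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  // Each worker claims the next unstarted task until none remain.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.min(limit, tasks.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Stand-in tasks: each resolves after a short delay, like an API request,
// and records how many tasks were in flight at the same moment.
let inFlight = 0;
let peak = 0;
const tasks = Array.from({ length: 30 }, (_, i) => async () => {
  inFlight++;
  peak = Math.max(peak, inFlight);
  await new Promise((resolve) => setTimeout(resolve, 5));
  inFlight--;
  return i;
});

// 30 stand-in requests at a concurrency of 10.
const done = runWithConcurrency(tasks, 10);
done.then((results) => {
  console.log(`completed ${results.length} requests, peak in flight: ${peak}`);
});
```

A pool like this keeps every slot busy rather than waiting for whole batches to finish, which matters when some requests are much slower than others.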

The number of queues in the application relative to maxApiConcurrency adds to the minimum time taken for a coordinator to execute. If you have 20 components, of which 10 are of the event from message type and thus have event queues in addition to concurrency queues, then 30 API requests are needed to count the visible messages in every queue. With a maxApiConcurrency of 10, those checks run no more than 10 at a time and will probably take a few seconds to clear.
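
The arithmetic in that example can be written down as a small function. This is only a back-of-the-envelope sketch: `estimateQueueChecks` is a hypothetical name, and counting whole "rounds" is a simplification that assumes requests complete in lockstep batches.

```javascript
// Hypothetical helper: estimate how many queue-attribute requests one
// coordinator pass needs, and how many rounds of requests that implies
// at a given API concurrency.
function estimateQueueChecks(componentCount, eventQueueCount, maxApiConcurrency) {
  // Every component has a concurrency queue; some also have an event queue.
  const requests = componentCount + eventQueueCount;
  const rounds = Math.ceil(requests / maxApiConcurrency);
  return { requests, rounds };
}

// The example above: 20 components, 10 of which have event queues.
console.log(estimateQueueChecks(20, 10, 10)); // { requests: 30, rounds: 3 }
```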

maxInvocationCount

The maxInvocationCount property puts a limit on how many other Lambda function invocations a single coordinator or invoker can carry out. Any invocations beyond the limit are delegated to other invoker instances. Both coordinators and invokers are subject to the standard maximum time limit for Lambda function execution, which currently stands at 300 seconds. It is perfectly possible to set maxInvocationCount high enough to cause a timeout given a large enough set of queue messages to process.

The idea here is to set a reasonable maxInvocationCount that, in conjunction with maxApiConcurrency, leads to a maximum expected execution time for a coordinator that is lower than the minInterval value. The best mode of operation is to have frequent invocation of coordinators to pull new messages from the queues in a timely fashion, and to do this the number of invocations made by any one coordinator instance has to be fairly low. Again, if a large number of invocations has to be made, then the coordinator will launch invoker instances. For example, if maxInvocationCount is 20 and there are suddenly 400 queue messages to process, then the running coordinators will collectively launch 20 invoker instances to do that job, and only take a few seconds to do it.
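
The 400-message example boils down to splitting pending invocations into batches of at most maxInvocationCount, one batch per invoker. The following sketch follows that arithmetic only; `splitIntoBatches` is a hypothetical helper, and the way Lambda Complex actually divides work between coordinators and invokers may differ in detail.

```javascript
// Hypothetical helper: split a list of pending invocations into batches,
// each small enough for a single invoker to handle.
function splitIntoBatches(invocations, maxInvocationCount) {
  const batches = [];
  for (let i = 0; i < invocations.length; i += maxInvocationCount) {
    batches.push(invocations.slice(i, i + maxInvocationCount));
  }
  return batches;
}

// 400 stand-in invocations with maxInvocationCount set to 20.
const pending = Array.from({ length: 400 }, (_, i) => ({ id: i }));
const batches = splitIntoBatches(pending, 20);
console.log(batches.length); // 20
```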

minInterval

The minInterval property is the length of time in seconds that a coordinator must wait, if it finishes its work early, before launching its next invocation. Assuming the other values are set to ensure a fairly speedy completion for a coordinator even under load, minInterval combines with the concurrency settings for individual components to determine the pace of the application. The longer the minInterval, the more slowly the application reacts to new messages and the more slowly messages are processed overall.
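
As a rough illustration of the waiting behavior, the sketch below computes how long a coordinator would still have to wait before invoking its successor. It assumes the interval is measured from the moment the coordinator started, which is one reading of the setting rather than something confirmed here, and `remainingWaitMs` is a hypothetical name.

```javascript
// Hypothetical helper. Assumption: minInterval is measured from the start
// of the coordinator's run, so only the unused remainder is waited out.
function remainingWaitMs(startedAtMs, nowMs, minIntervalSeconds) {
  const elapsedMs = nowMs - startedAtMs;
  return Math.max(0, minIntervalSeconds * 1000 - elapsedMs);
}

// Finished after 4 seconds with minInterval: 10, so 6 seconds remain.
console.log(remainingWaitMs(0, 4000, 10)); // 6000
// Ran for 12 seconds, longer than the interval, so no wait at all.
console.log(remainingWaitMs(0, 12000, 10)); // 0
```

Under that assumption, a coordinator whose execution time creeps above minInterval stops waiting entirely, which is why the other settings should keep its run time comfortably below the interval.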